
Print this newsletter out and talk it through with your colleagues. Clarify your own thoughts, borrow theirs, and see where the conversation goes.

Here we go 🙂

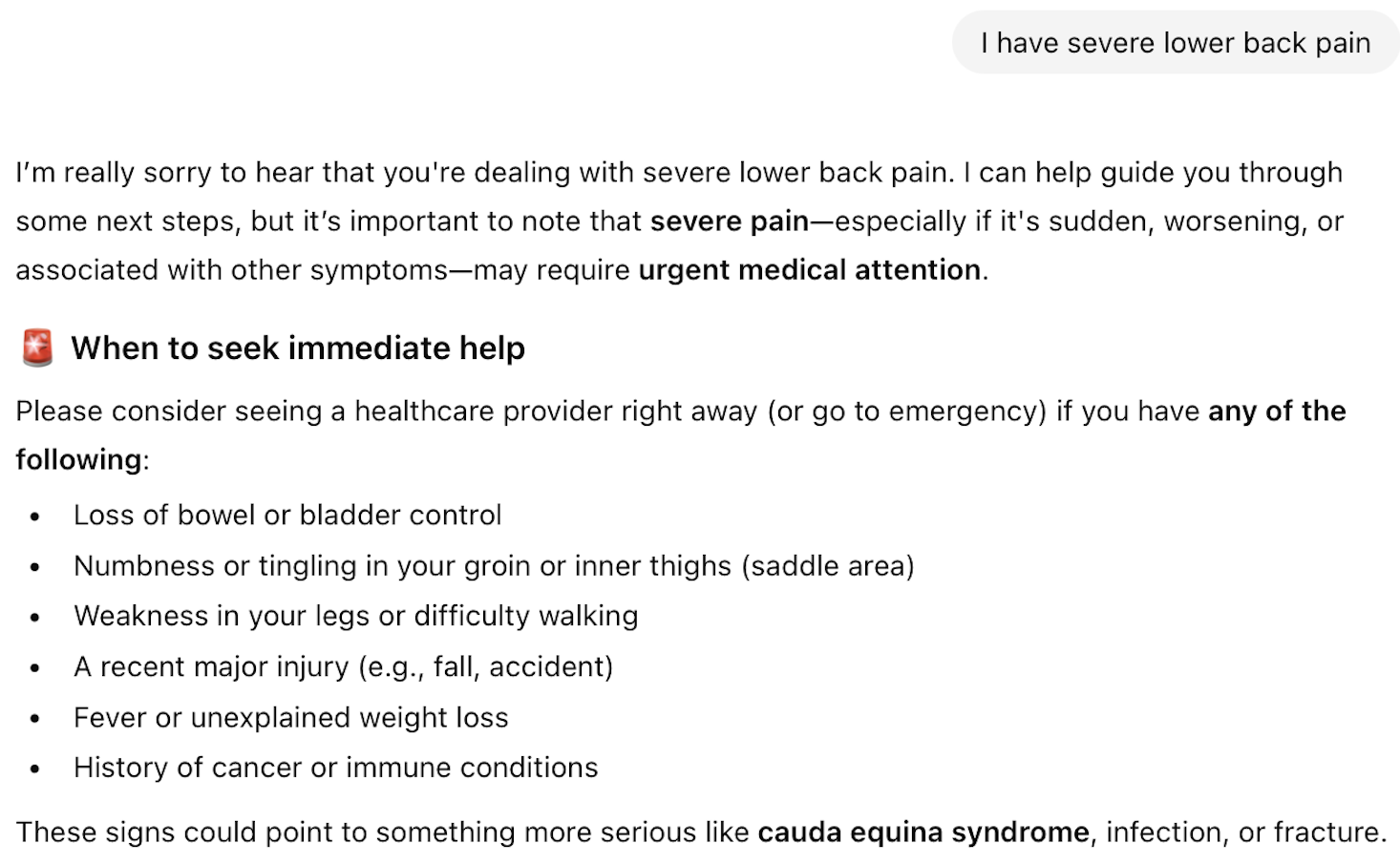

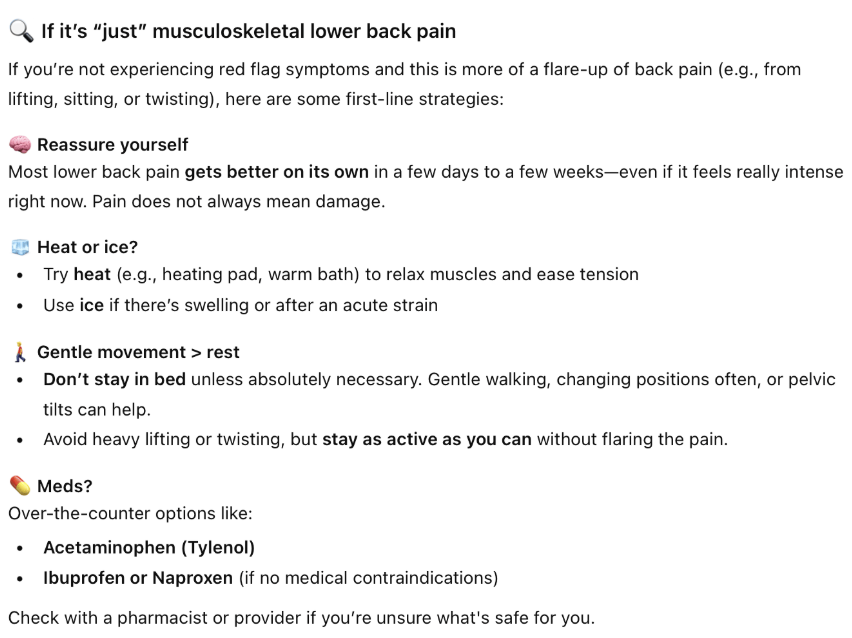

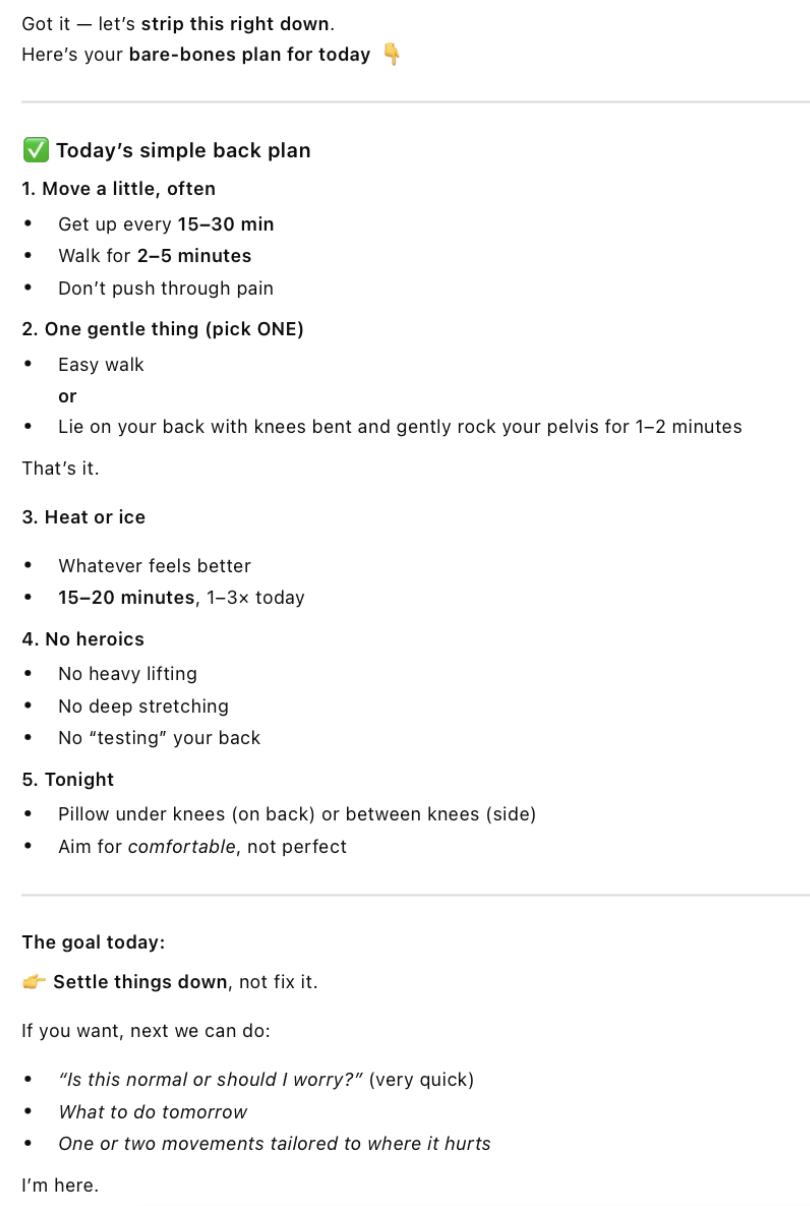

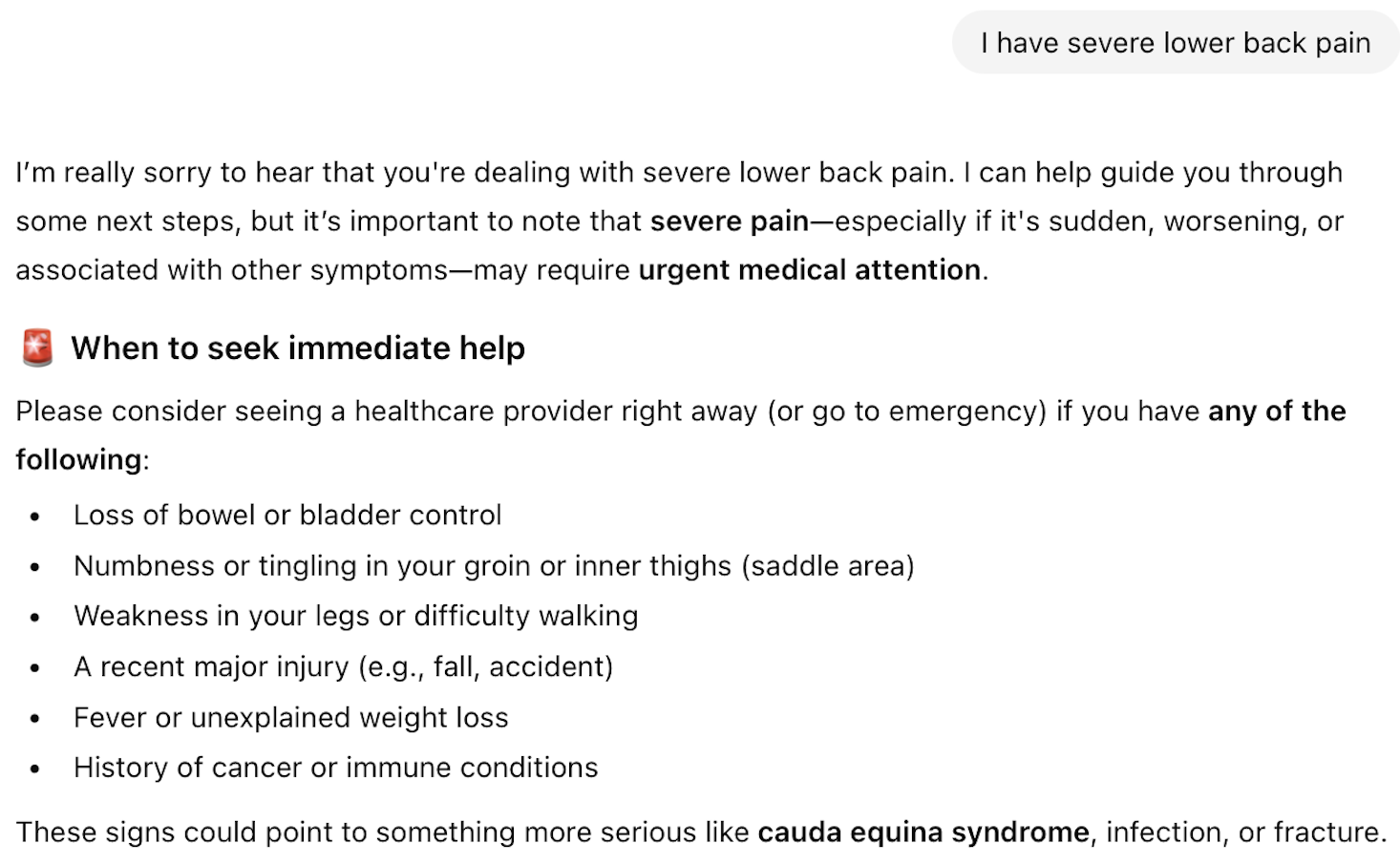

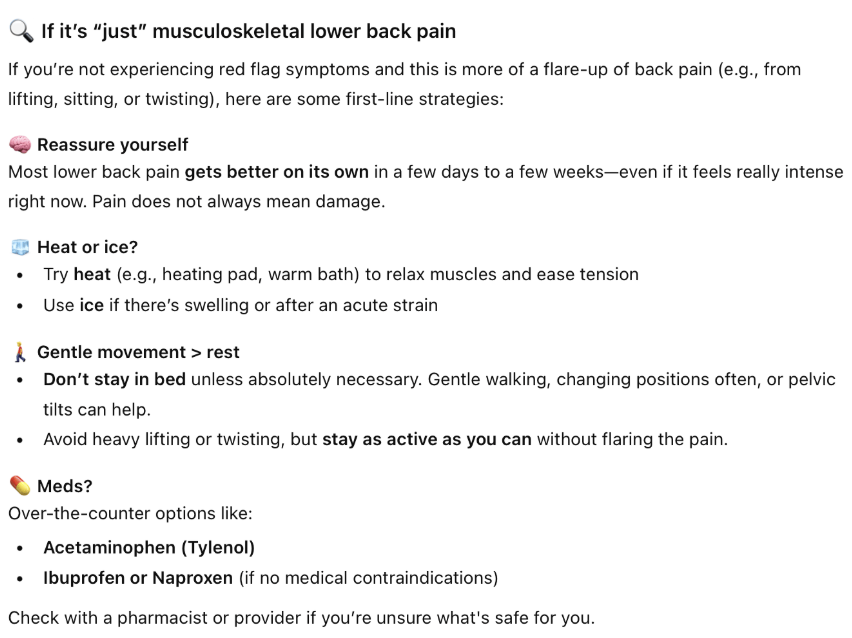

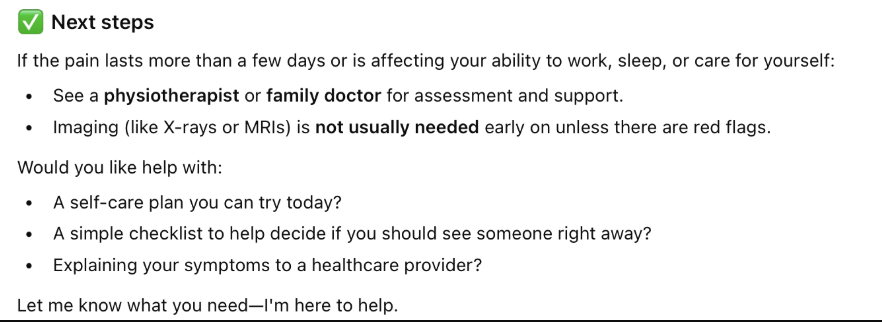

I recently typed something very simple into ChatGPT:

“I have severe low back pain.”

What came back didn’t surprise me.

Its output keeps getting better every month.

It wasn’t alarmist. It was calm and empathetic. It didn’t jump straight to scans or worst-case diagnoses. Instead, it normalized the experience, explained what might be going on, and outlined sensible next steps.

It flagged red flags appropriately, explained pain in clear language, and offered practical guidance. No silly BS. No outdated biomechanical boogeymen. Just good advice.

And let’s not forget, ChatGPT isn’t even a rehab-specific system. There’s no refined MSK dataset. No outcome tracking. No clinician oversight. This is simply a general-purpose transformer.

Which raises an important question:

If general-purpose AI is already this good, and it’s clearly getting better, what happens when people effectively have the best physiotherapist in their pocket?

More importantly:

What does that mean for those of us who do this work every day?

If high-quality education, reassurance, treatment planning, and follow-up become easy, cheap, and always available, then those things stop being differentiators.

So the real reflection isn’t “Will AI replace us?”

It’s:

What value do I provide that makes someone choose to see me, in person, with their time, their trust, and their money?

That’s the question worth sitting with.

When we talk about AI in rehab, it’s easy to drift to the extremes.

On one side: big promises, fancy demos, and bold claims about disruption.

On the other: a quiet urge to put our heads in the sand.

Both reactions make sense to me.

But, like most things worth thinking about, the more interesting conversation sits in the middle.

Purpose-built systems like Hinge Health and Sword Health aren’t trying to replace clinicians outright. They’re in part designed to solve problems humans tend to struggle with consistently:

Applying evidence reliably

Tracking outcomes over time

Creating, updating, and communicating treatment plans

Sharing those plans across allied health teams

Being available outside scheduled appointment times

Not because clinicians don’t care, but because humans are busy, get tired, and work inside imperfect systems.

AI doesn’t get distracted. It doesn’t forget. It doesn’t have a packed caseload or a bad day.

In many ways, AI is simply solving for human inconsistency.

And that raises a more useful question than whether this is “good” or “bad”:

If these systems are increasingly handling the repeatable, scalable parts of care… where does that leave us?

That’s where the real work and opportunity live.

AI-assisted rehab isn’t mainstream, at least not yet.

Most people aren’t using it. Many haven’t even heard of it. And plenty of clinicians haven’t encountered it.

Despite the headlines and the Facebook ads, this type of care also isn’t reimbursed in the same way traditional allied health is.

Platforms like Hinge Health and Sword Health are typically funded through:

- Specific employer contracts

- Select insurers

- Pilot programs

- Self-insured health plans

Not universal coverage. Not fee-for-service reimbursement.

And insurers don’t fund experiments out of curiosity. They do it to answer very practical questions:

- Can outcomes be tracked more reliably?

- Can care be delivered with fewer visits?

- Can costs be reduced without unacceptable trade-offs?

- Can access improve for people who wouldn’t otherwise seek care?

We don’t have final answers yet.

But even in this early, experimental phase, AI-assisted rehab could be reshaping expectations around:

- What meaningful follow-up looks like

- How treatment plans are created, updated, and communicated

- Whether care needs to happen in a clinic

- How available support should be between visits

My current view is that a new reference point is slowly emerging.

One that challenges all of us, clinicians included, to look honestly at how consistently we deliver care that is:

- Evidence-informed

- Trackable

- Goal-oriented

Not occasionally. Not on our best days. But reliably, at scale, inside the systems we actually work in.

For a moment, hold all of these as true:

Some people don’t want to come into a clinic.

Some don’t want, or can’t afford, ongoing out-of-pocket care.

Some are perfectly comfortable seeing different clinicians over time.

And that invites an important reflection:

What do people actually come to me for?

Because many parts of care can now be done very well by systems:

- Consistent, evidence-informed treatment plans

- Reliable outcome tracking and progress monitoring

- Clear instructions, reminders, and follow-up

- Rapid updates and communication across care teams

For a lot of people, that’s enough, and in some cases better than what humans can deliver consistently under pressure.

So when someone chooses to see me, it’s usually not just for exercises or information.

I think it’s for:

- Feeling seen and understood

- Skilled hands and thoughtful presence

- Calm, specific reassurance when things feel uncertain

- Clinical judgment in the grey areas, when symptoms don’t follow the rules or progress stalls.

Knowing what AI is already good at doesn’t threaten my work. I really do think it helps clarify what matters most.

Perhaps the future isn’t about competing with systems that scale. It’s about leveraging their best parts and being intentional with the parts of practice that can’t.

🗓️ Nanaimo, BC: April 12

💻 The online portion is now open. Plenty of live reps coming up in April.

🗓️ Whitehorse, Yukon: October 24 & 25... two full days of live needling reps.

Pick one low-stakes moment this week and deliberately use technology as a support.

The aim: to show up more fully where it matters.

For example:

Ask a general AI tool to explain a common condition (like low back pain) in plain language, then compare it to how you usually explain it. Keep what works. Rewrite what doesn’t.

Use tech to draft a simple treatment plan or flare-up guide, then edit it until it sounds like you: clear, human, and specific.

Ask an AI tool to suggest outcome measures for a case, then choose the one that best fits your patient’s life, values, and goals.

Or, at a systems level:

Offload documentation and care summaries so you’re not rebuilding the same explanations from scratch each visit.

Use AI Scribe to generate clear, consistent treatment plans that you can quickly review, refine, and send to every new patient.

Then reflect:

Where did technology save you time or cognitive load?

Where did it raise the baseline of clarity or consistency?

Where did your clinical judgment, presence, or reassurance still matter most?

We're not outsourcing thinking.

Let technology handle what it does well, so you can shine a bit brighter in the parts of practice that only you can do.

If we want to provide care that’s genuinely on par with, or better than, AI-assisted healthcare, some things won’t be optional in the future:

- In-person care has to be excellent

Skilled assessment, thoughtful hands-on work (if that's important in your context), and presence.

- Communication has to be crystal clear

No vague plans. No “we’ll see how it goes.”

Course here and here.

- Treatment planning has to be visible and alive

Shared, updated, and understood.

Course here

- Outcome tracking has to be meaningful

Function, confidence, participation and symptom scores.

- Continuity has to extend beyond the session

Flare plans. Progress rules. Clear next steps.

To me this is not about doing more. It’s about bringing more intention to the right things.

A consistent finding across digital MSK and physiotherapy research is that the biggest gains rarely come from new or novel interventions.

They come from doing the basics ... reliably:

- Being a good communicator and educator

- Leveraging behavioural science

- Structured treatment planning and outcome tracking

- Timely updates and follow-up between visits

AI-assisted systems won’t succeed because they’re smarter clinicians.

They will succeed because they don’t miss important steps.

AI isn’t pointing toward something futuristic. I think of it as holding up a mirror. The mirror highlights what actually matters in care and where, as humans working in busy systems, we may fall down.

Stay nerdy,